Curve Fitting and Understanding

Three ways of improving understanding: Curve Fitting, Artificial Intelligence (AI), and Artificial General Intelligence (AGI), forming a not entirely monotonic scale of performance

A working paper to help me think through the categories of capabilities that align with each

Current technology seems to consist primarily of slick statistical correlations over the input dataset. The final result, even with pretraining followed by fine-tuning, is a monolithic system. I’m not seeing anything in the state of the art (Dec 2023) that is partitionable or modularizable.

This lack of any sense of modularity prevents the information within the system from being partitioned, and prevents the system from comparing those partitions. The implication is that if the resulting system incorporates invalid data, that data can’t be vetted or pruned.

Reliable training data is an Achilles heel for these systems. I’m not seeing how they could begin to determine what’s a reliable data source. Most systems can’t run their own experiments, and vetting the experimental results (bad experiment, vs. falsifying data) leads to a similar garbage-in/garbage-out problem.

The situation is worse than this, since we’re starting to see system output quality decline as the input source data becomes clogged with a significant quantity of “AI”-generated text, much of which is hallucinatory. The lack of any significant input vetting capability forces the systems to fall back on a frequency-weighted noise reduction analysis, which is ineffective when the hallucinations outnumber the realities. People are like that too, but they compensate by tuning out data sources that have proven unreliable. In fairness, people also require a ton of training to do this vetting even half well; even with decades of training we can barely escape basic cognitive biases in our own fields.
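To make the failure mode concrete, here is a toy sketch. It assumes each claim is reported by several sources and that each source already has a reliability score; the source names, scores, and the 0.1 default weight for unknown sources are all hypothetical. Plain frequency voting picks whatever is repeated most, while reliability weighting lets a few trusted sources outvote many unreliable ones.

```python
from collections import defaultdict

def majority_vote(reports):
    """Pick the answer reported most often (frequency-weighted)."""
    counts = defaultdict(int)
    for _source, answer in reports:
        counts[answer] += 1
    return max(counts, key=counts.get)

def reliability_vote(reports, reliability, default=0.1):
    """Pick the answer with the most reliability-weighted support.

    Sources missing from `reliability` get a small default weight,
    an assumption standing in for 'unknown provenance'.
    """
    scores = defaultdict(float)
    for source, answer in reports:
        scores[answer] += reliability.get(source, default)
    return max(scores, key=scores.get)

# Hypothetical data: five generated pages repeat a wrong answer,
# two vetted sources report the right one.
reports = [(f"bot-page-{i}", "wrong") for i in range(5)]
reports += [("journal-a", "right"), ("journal-b", "right")]
reliability = {"journal-a": 0.9, "journal-b": 0.8}

print(majority_vote(reports))                  # wrong
print(reliability_vote(reports, reliability))  # right
```

The hard part, as the paragraph above says, is where the reliability scores come from in the first place; the sketch simply assumes them.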

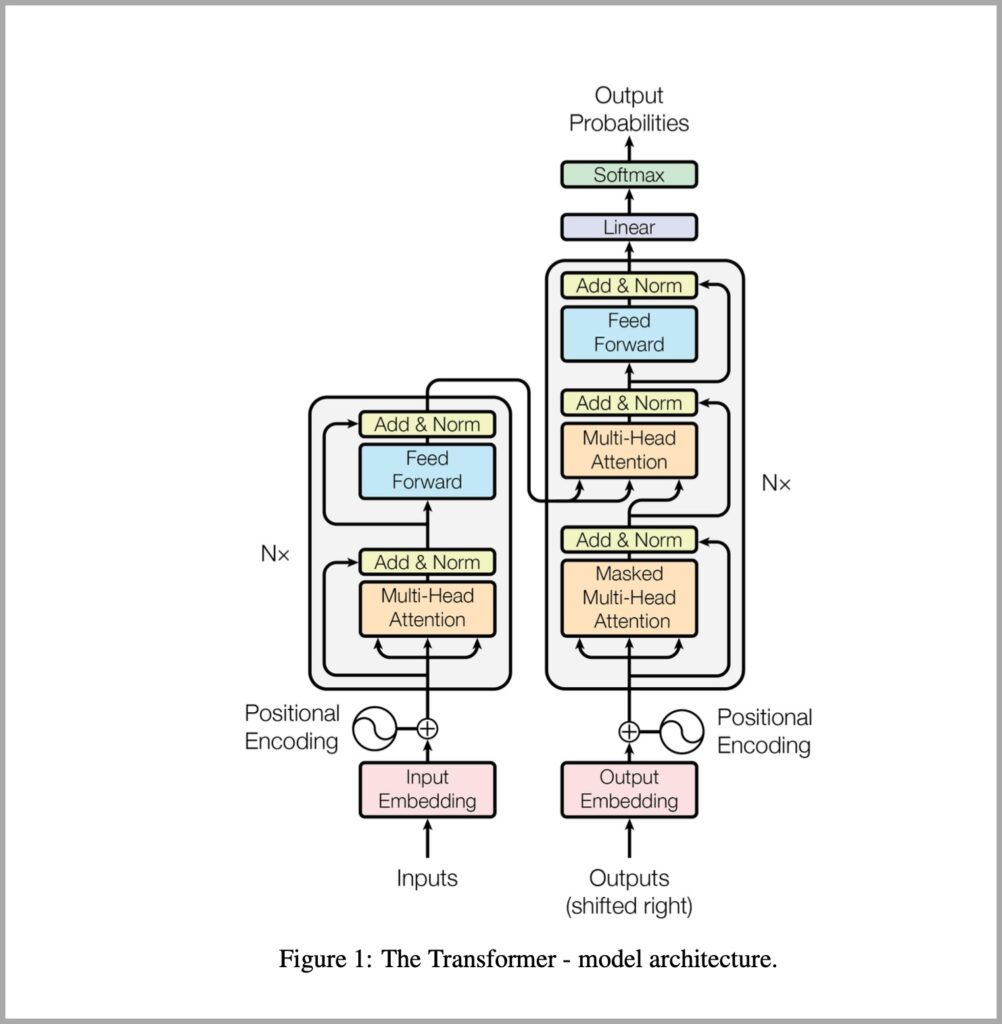

Current practice (late 2023) involves transformer models, which are canonically depicted like this (from the Attention Is All You Need paper: Vaswani, Ashish et al. “Attention is All you Need.” Neural Information Processing Systems (2017).)

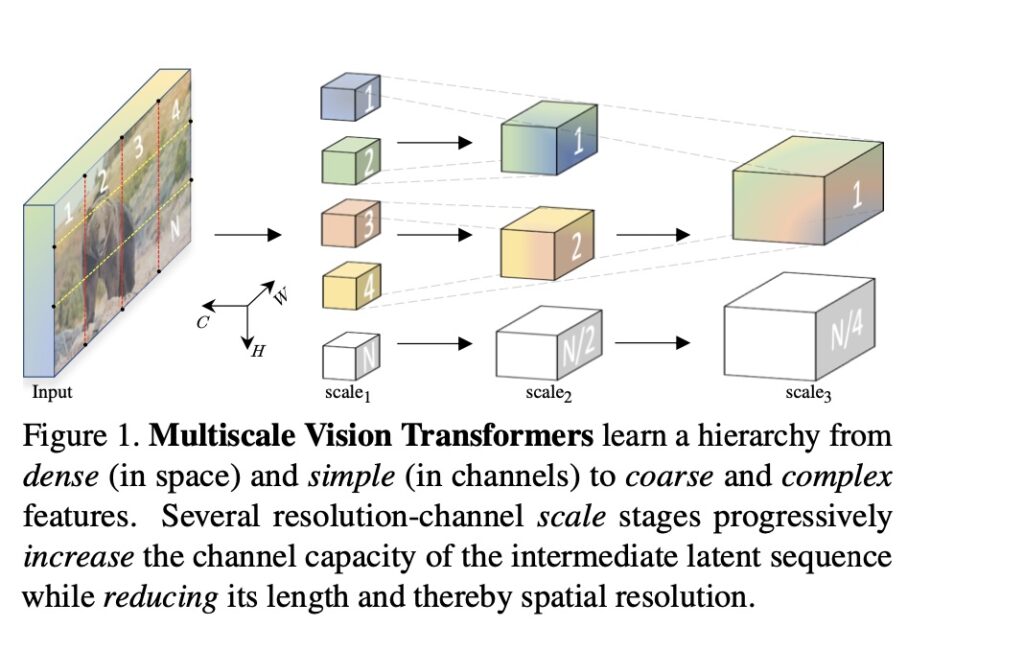

However, I’m also fond of this image from the Multiscale Vision Transformers paper (Fan, Haoqi et al. “Multiscale Vision Transformers.” 2021 IEEE/CVF International Conference on Computer Vision (ICCV) (2021): 6804–6815.), which shows that the layers, and the heads paying attention to them, also extend across multiple scales (of abstraction).

It shows more explicitly that at every layer of processing, the “decision” on the value of each input is determined by a contextual analysis at multiple scales, in a manner induced by the training protocol.

These drawings illustrate that multi-head attention architectures, which allow connections between all the nodes/layers of the networks, can form a complex web of correlations between all of the features in the entire training set. Considering that there are billions of connections in these networks, and that the computational requirements for training them exceed the resources of all but the largest players, “complex” is an understatement.
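For reference, the core operation in the Vaswani et al. paper is scaled dot-product attention, Attention(Q, K, V) = softmax(QKᵀ/√d_k)V. A minimal pure-Python sketch (single head, no learned projections, toy vectors chosen arbitrarily) shows the “everything connects to everything” property: each output row is a weighted mixture of every input row, with weights that sum to 1.

```python
import math

def softmax(xs):
    m = max(xs)  # subtract the max for numerical stability
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]

def attention(Q, K, V):
    """Single-head scaled dot-product attention on lists of row vectors.

    Returns (outputs, weights); weights[i][j] is how much position i
    attends to position j.
    """
    d_k = len(K[0])
    weights = []
    for q in Q:
        scores = [sum(qi * ki for qi, ki in zip(q, k)) / math.sqrt(d_k) for k in K]
        weights.append(softmax(scores))
    # Each output row is the attention-weighted average of all value rows.
    outputs = [
        [sum(w * v[c] for w, v in zip(row, V)) for c in range(len(V[0]))]
        for row in weights
    ]
    return outputs, weights

# Three toy token vectors used as queries, keys, and values (self-attention).
X = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
out, w = attention(X, X, X)
for row in w:
    print([round(x, 3) for x in row])
```

Multi-head attention runs several of these in parallel on learned linear projections of the inputs and concatenates the results; those projections are omitted here for brevity.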

Note: Although the systems are visually presented in ways that don’t emphasize network layers and node positions, there are a number of different codings involved: word embeddings that encode similarity (as measured by benchmarks such as SimLex-999, which explicitly quantifies similarity rather than association or relatedness, so that pairs of entities that are associated but not actually similar, e.g., Freud and psychology, have a low rating), input position, and (I believe) layer.
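As one concrete instance of a position coding, the original transformer paper adds a fixed sinusoidal vector to each token embedding: PE(pos, 2i) = sin(pos / 10000^(2i/d_model)) and PE(pos, 2i+1) = cos(pos / 10000^(2i/d_model)). A short sketch, with d_model = 8 chosen arbitrarily and an even d_model assumed:

```python
import math

def positional_encoding(pos, d_model):
    """Sinusoidal position encoding from 'Attention Is All You Need'.

    Even dimensions use sine, odd dimensions use cosine, with wavelengths
    forming a geometric progression. Assumes d_model is even.
    """
    pe = []
    for i in range(d_model // 2):
        angle = pos / (10000 ** (2 * i / d_model))
        pe.append(math.sin(angle))
        pe.append(math.cos(angle))
    return pe

for pos in range(3):
    print(pos, [round(x, 3) for x in positional_encoding(pos, 8)])
```

Many later models use learned or relative position encodings instead; this is only the original scheme.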

Vetting

To work at web scale, systems need the ability to vet data sources and filter out those that lack sufficient quality. There currently are (obviously) methods for doing this semi-manually. However, success even in the near term requires that systems vet their input with little manual intervention, since any system operating at (web) scale will undoubtedly be the target of incessant AI-bot-generated content. (I’m reminded of this old article by James Bridle on autogenerated content designed to bypass YouTube filters: https://medium.com/@jamesbridle/something-is-wrong-on-the-internet-c39c471271d2)

Data vetting is an unsolved problem overall, even manually at small scale, let alone automatically at web scale. It’s a superset of data cleaning, which is damned difficult in and of itself. Data vetting, at its best, would have many of the characteristics of peer review, and as we’ve seen from the number of high-profile takedowns of fraudulent studies of late, humanity hasn’t completely solved that problem.

The immediate implication is the necessity of tracking the dependencies of the information active in the system: not only the input data but also their rollups and the imputations they assisted. The system has to have a model of its model of the world; that is, it has to be able to reason about its model of the world, which requires that it understand how models of the world work, which quickly gets you to developing an understanding of causality.
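A minimal sketch of that dependency tracking, assuming (hypothetically) that the system’s knowledge were stored as an explicit graph in which each derived item records what it was inferred from; current monolithic models have no such structure. Retracting a source then amounts to finding everything downstream of it. All class and item names are illustrative.

```python
from collections import defaultdict, deque

class ProvenanceGraph:
    """Hypothetical provenance store: item -> the items derived from it."""

    def __init__(self):
        self.children = defaultdict(set)

    def derive(self, item, *sources):
        """Record that `item` was inferred (rolled up, imputed) from `sources`."""
        for src in sources:
            self.children[src].add(item)

    def tainted_by(self, source):
        """All items that depend, directly or transitively, on `source`."""
        seen, queue = set(), deque([source])
        while queue:
            node = queue.popleft()
            for child in self.children[node]:
                if child not in seen:
                    seen.add(child)
                    queue.append(child)
        return seen

g = ProvenanceGraph()
g.derive("summary-1", "source-A", "source-B")
g.derive("imputed-gap", "summary-1")
g.derive("summary-2", "source-C")
print(sorted(g.tainted_by("source-A")))  # ['imputed-gap', 'summary-1']
```

This only answers “what could be affected?”; deciding how much each affected item should change is the harder question taken up under Modularization.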

Which is hard. Not only is identifying the model hard (& a very meta task), but so is identifying the kind of causality being modeled vis-à-vis what may be required.

Causality

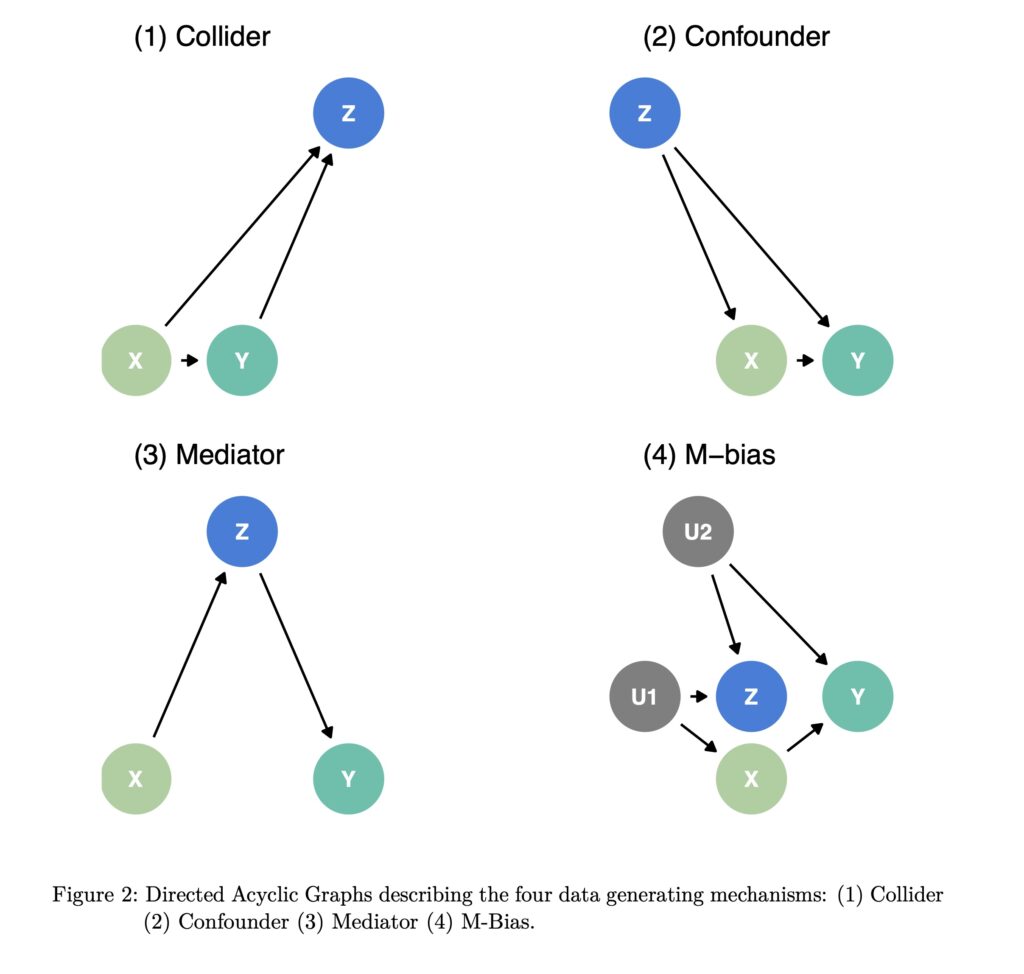

As an illustration of how difficult it can be to determine causal relationships, I’m including a figure from a paper by Lucy D’Agostino McGowan et al. (Lucy D’Agostino McGowan, Travis Gerke & Malcolm Barrett (01 Dec 2023): “Causal inference is not just a statistics problem,” Journal of Statistics and Data Science Education; earlier version at https://arxiv.org/pdf/2304.02683v3.pdf).

The paper presents four distributions (one for each causal mechanism), a causal version of Anscombe’s quartet, which the authors describe as follows:

Often used to teach introductory statistics courses, Anscombe created the quartet to illustrate the importance of visualizing data before drawing conclusions based on statistical analyses alone. Here, we propose a different quartet, where statistical summaries do not provide insight into the underlying mechanism, but even visualizations do not solve the issue.
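A small worked example, in the spirit of the quartet rather than a reproduction of it, shows why even perfect data doesn’t settle the question. The sketch defines two linear-Gaussian models over X, Y, Z, with coefficients chosen by hand for illustration. In the first, Z is a confounder (Z → X, Z → Y, X → Y) and the true effect of X on Y is 1. In the second there is no confounding (X → Y → Z) and the true effect is 3/2. Both imply exactly the same covariance matrix, so no amount of data distinguishes them, yet the correct analysis is “adjust for Z” in one case and “don’t” in the other.

```python
from fractions import Fraction as F

def implied_cov(order, edges, noise):
    """Covariance matrix implied by a linear SEM with independent noise.

    order: variables in topological order
    edges: {child: {parent: coefficient}}
    noise: {variable: noise variance}
    """
    cov = {}
    for i, v in enumerate(order):
        parents = edges.get(v, {})
        for u in order[:i]:
            # Cov(v, u) = sum_p b_p Cov(p, u); v's own noise is independent of u.
            c = sum(b * cov[p, u] for p, b in parents.items())
            cov[v, u] = cov[u, v] = c
        cov[v, v] = noise[v] + sum(
            b1 * b2 * cov[p1, p2]
            for p1, b1 in parents.items()
            for p2, b2 in parents.items()
        )
    return cov

def slope_unadjusted(c):
    return c["X", "Y"] / c["X", "X"]

def slope_adjusted_for_z(c):
    # Coefficient on X when regressing Y on both X and Z (normal equations).
    num = c["X", "Y"] * c["Z", "Z"] - c["X", "Z"] * c["Z", "Y"]
    den = c["X", "X"] * c["Z", "Z"] - c["X", "Z"] ** 2
    return num / den

confounder = implied_cov(
    ["Z", "X", "Y"],
    {"X": {"Z": F(1)}, "Y": {"X": F(1), "Z": F(1)}},
    {"Z": F(1), "X": F(1), "Y": F(1)},
)
chain = implied_cov(
    ["X", "Y", "Z"],
    {"Y": {"X": F(3, 2)}, "Z": {"Y": F(1, 3)}},
    {"X": F(2), "Y": F(3, 2), "Z": F(1, 3)},
)

print(confounder == chain)               # True: identical observable data
print(slope_unadjusted(confounder))      # 3/2 (correct only for the chain)
print(slope_adjusted_for_z(confounder))  # 1 (correct only for the confounder)
```

In the chain model Z is a downstream consequence of Y, so adjusting for it biases the estimate; in the confounder model, failing to adjust does. Only knowledge of the mechanism, not the data, tells you which.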

Modularization

At this point the implications (& necessity) of a modularization mechanism become evident. After identifying and reasoning about the putative model, the requirement emerges to decouple it from the rest of the system, and to decide how to group the model’s components. Modularity is perhaps a bit stronger than what is actually required. Strict modularity implies that an item can be removed and replaced with another. The minimal requirement is separability: the piece can be removed or flagged as incorrect without the whole artifice crashing down.

Pruning a chunk of false information seems inherently easier than adding a new chunk since the system could (& should) theoretically be able to track the influence of the chunk through the system, by monitoring the influence of the chunk on the nodes to which it is connected. Given this, the system might be able to at least reduce the confidence assigned to these locations. How hard one pushes on that idea is somewhat of an open question.

Conceptually you’d want to strengthen any cautions around the items that were highly influenced by discounted information. However, completely removing all impacted locations, even if only those above a certain threshold, would require an understanding of how it would impact overall system stability, which a priori seems hard. AFAIK there hasn’t been much success developing methods to incrementally update these systems either by adding new information or eliminating bad data.
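The discounting idea in the last two paragraphs can be sketched as follows, assuming (hypothetically) that the influence of each item on each downstream item has already been estimated as a weight between 0 and 1, which is exactly what current architectures don’t expose. When a source is discounted, confidence in dependent items drops in proportion to their exposure, and strongly exposed items are flagged for review rather than deleted, in keeping with the stability concern above. Measuring exposure as the strongest single chain of influence is one assumption among several reasonable ones.

```python
def propagate_discount(influence, confidence, bad_source, flag_threshold=0.5):
    """Lower confidence in items downstream of a discredited source.

    influence:  {item: {downstream_item: weight in [0, 1]}}  (hypothetical)
    confidence: {item: confidence in [0, 1]}
    Exposure = max over paths of the product of influence weights.
    Returns (new_confidence, flagged_items); nothing is deleted.
    """
    exposure = {bad_source: 1.0}
    stack = [bad_source]
    while stack:
        node = stack.pop()
        for child, weight in influence.get(node, {}).items():
            e = exposure[node] * weight
            if e > exposure.get(child, 0.0):  # revisit only on a stronger path
                exposure[child] = e
                stack.append(child)

    new_confidence = dict(confidence)
    flagged = []
    for item, e in exposure.items():
        if item == bad_source:
            new_confidence[item] = 0.0
        else:
            new_confidence[item] = confidence.get(item, 1.0) * (1.0 - e)
            if e >= flag_threshold:
                flagged.append(item)
    return new_confidence, sorted(flagged)

influence = {"src": {"a": 0.9, "b": 0.2}, "a": {"c": 0.5}}
confidence = {"src": 0.8, "a": 0.9, "b": 0.9, "c": 0.6, "d": 0.7}
new_conf, flagged = propagate_discount(influence, confidence, "src")
print(flagged)  # ['a']
print({k: round(v, 3) for k, v in new_conf.items()})
```

Here item “c” is only reached through a 0.5-weight link, so its confidence is reduced but it is not flagged, while the unrelated item “d” is left untouched.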

If modularization/introspection mechanisms are in place, that at least raises the possibility of identifying multiple interrelated chunks of information and looking at them abstractly. Abstracting and comparing the chunks gives one the opportunity to notice patterns between them: drawing analogies and making extrapolations.

What’s interesting here is that transformers seem to already kind of do this: as a number of people have noticed, their omnipresent hallucinations exhibit some of the characteristics of a let-it-all-out brainstorming session. This might imply a loose opportunity for the discounting/flagging system described above.

Reliable Information

Of course, there are two other core ways of achieving reliable data. The first, and arguably the best, is to run the experiment: go out into the world and see for yourself. The second, which is always necessary and is certainly the most common approach people use, is to start off with some trusted sources, e.g., high-quality peer-reviewed journals. Again, I’m not sure exactly how you do that at web scale in the presence of countermeasures.
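One long-standing idea for starting from trusted sources at web scale is TrustRank-style propagation: trust flows outward from a small, hand-vetted seed set along links, on the premise that reputable sources rarely link to junk. The sketch below is a simplified version of that idea (a PageRank-style iteration whose restart distribution is restricted to the seeds), not the published algorithm. The link graph, seed set, damping of 0.85, and iteration count are all hypothetical choices.

```python
def propagate_trust(links, seeds, damping=0.85, iterations=50):
    """Seed-biased PageRank: trust flows from vetted seeds along links.

    links: {page: [pages it links to]}
    seeds: set of pages vetted by hand
    """
    pages = set(links) | {p for targets in links.values() for p in targets}
    seed_share = {p: (1.0 / len(seeds) if p in seeds else 0.0) for p in pages}
    trust = dict(seed_share)
    for _ in range(iterations):
        # Restart mass goes only to seeds; the rest flows along links.
        nxt = {p: (1 - damping) * seed_share[p] for p in pages}
        for page, targets in links.items():
            if targets:
                share = damping * trust[page] / len(targets)
                for t in targets:
                    nxt[t] += share
        trust = nxt
    return trust

links = {
    "journal": ["lab-site", "review"],
    "lab-site": ["review"],
    "review": ["journal"],
    "spam-1": ["spam-2", "journal"],  # spam can link to good pages...
    "spam-2": ["spam-1"],             # ...but nothing trusted links back
}
trust = propagate_trust(links, {"journal"})
for page in sorted(trust):
    print(page, round(trust[page], 3))
```

The countermeasure problem the text raises shows up directly: a spam page that manages to get a link from any trusted page inherits some trust, so the seed set and the links from it still need human vetting.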

It seems that if the system’s goal is to learn/hypothesize/test in a scientific environment, the system could probably be set up to run some of the experiments itself. This, of course, assumes the system has a good grasp of causality and control of automated lab/test facilities. So it’s not only difficult and expensive, but could also cover only a small subset of the web-scale information space.

The Dual Viewpoint

Given these General Intelligence goals of AGI and the structural shortcomings of existing systems, the dual question poses itself: what can these systems do now? (Note: the applications in the more localized domains discussed here frequently refer to machine learning rather than large language models. The systems I’ll highlight here use large datasets relative to the domain.)

Obviously a prerequisite for doing anything well, other than hallucinating, is data that has been diligently vetted and has solid provenance. For the systems discussed below, which don’t work at web scale and are focused on a specific area, this proved a tractable requirement.

There are at least three examples of transformative results from transformer (and other “neural network” big data) models, when they’re used in areas conceptually close to their training set (conceptually close ~= I could imagine an isomorphism between the two). That said, two of the three still required some domain-dependent modifications to tailor them to the task. They also did not (& did not intend to) address the modularity issues.

I’m not going to summarize the papers so much as excerpt highlights and lightly comment on the results.

Protein Structure Approximation

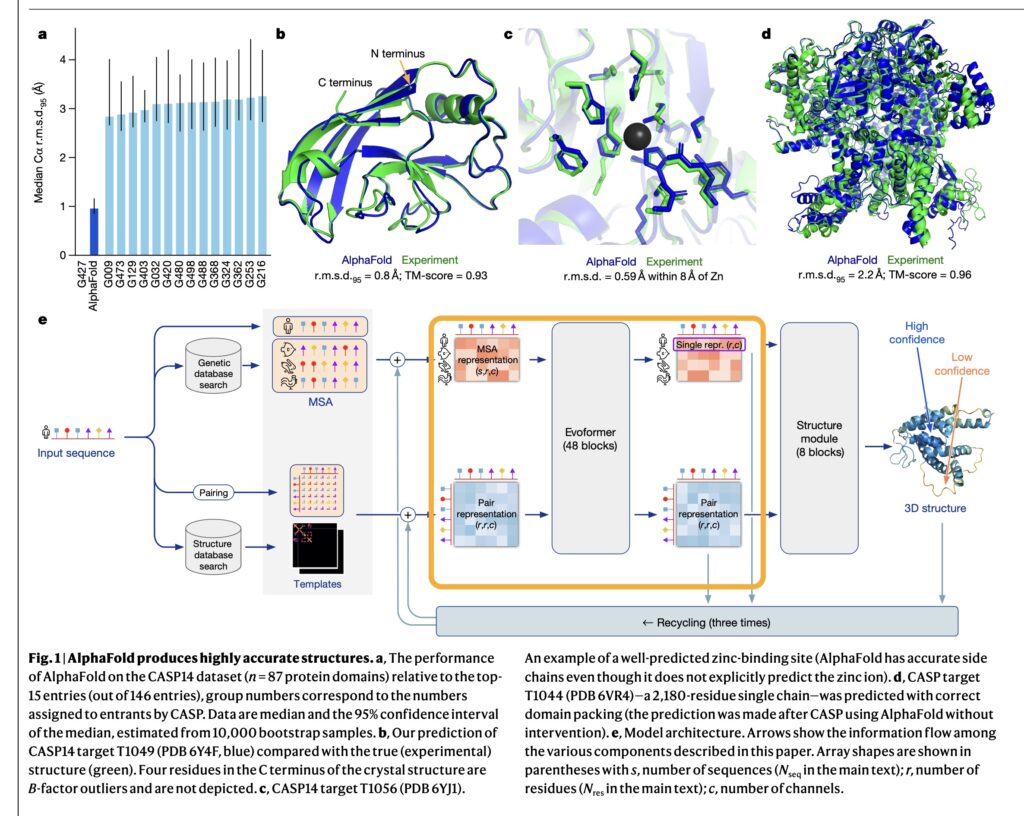

An early (2021) example is AlphaFold, which was shown to be highly accurate in predicting 3-D protein structures when tested against the CASP14 benchmark.

From the paper

AlphaFold greatly improves the accuracy of structure prediction by incorporating novel neural network architectures and training procedures based on the evolutionary, physical and geometric constraints of protein structures. In particular, we demonstrate a new architecture to jointly embed multiple sequence alignments (MSAs) and pairwise features, a new output representation and associated loss that enable accurate end-to-end structure prediction. (Jumper, John M. et al. “Highly accurate protein structure prediction with AlphaFold.” Nature 596 (2021): 583–589.)

AlphaFold is not an out-of-the-box transformer model. It incorporates two sequence-specific modifications (outlined in orange in the figure), consisting of:

- Multiple Sequence Alignment module and a

- Pairwise evolutionary correlator,

both of which are specific to identifying biologically similar sequences.
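AlphaFold’s modules are far more elaborate, but the underlying signal they build on is easy to illustrate: in a multiple sequence alignment, positions that are in contact in the folded protein tend to mutate together, so their columns co-vary. A minimal sketch computes the mutual information between alignment columns, a classic and simple coevolution score (not AlphaFold’s method), on a made-up toy alignment.

```python
import math
from collections import Counter

def column_mutual_information(msa, i, j):
    """Mutual information (in bits) between columns i and j of an alignment."""
    n = len(msa)
    pi = Counter(seq[i] for seq in msa)
    pj = Counter(seq[j] for seq in msa)
    pij = Counter((seq[i], seq[j]) for seq in msa)
    mi = 0.0
    for (a, b), count in pij.items():
        p_ab = count / n
        mi += p_ab * math.log2(p_ab / ((pi[a] / n) * (pj[b] / n)))
    return mi

# Toy alignment (made up): columns 1 and 3 co-vary (K pairs with E, R with D);
# column 0 is fully conserved; columns 2 and 3 vary independently.
msa = ["AKGE", "ARGD", "AKLE", "ARLD"]
print(round(column_mutual_information(msa, 1, 3), 3))  # 1.0
print(round(column_mutual_information(msa, 0, 1), 3))  # 0.0
```

Practical contact predictors correct raw mutual information for phylogenetic bias and indirect couplings (e.g., the average product correction or direct coupling analysis); this is only the simplest form of the signal.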

Summary: AlphaFold can be thought of as a statistical heuristic predictor of protein structure. It isn’t a quantum simulation of the protein, but in many instances the prediction is extremely close to experimentally determined structural data. Since, given our currently relatively primitive level of compute power, heuristic prediction is all we can do at any reasonable scale, in many cases it is the best result currently available.

However, as with any statistical estimation, the results are often best when the question is similar to the source data. Given the size of these models and their opacity, it’s always best to do a bit of checking before assuming the result is accurate. (Terwilliger, Thomas C. et al. “AlphaFold predictions are valuable hypotheses, and accelerate but do not replace experimental structure determination.” bioRxiv (2023).)

This isn’t really a significant caveat in that, even if you had a completely accurate experimental measurement of the protein structure, the actual structure is sensitive to the protein’s environment so it requires in situ verification in either case.
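A cheap first step in that “bit of checking” is AlphaFold’s own per-residue confidence score, pLDDT (0 to 100), which AlphaFold-style PDB files store in the B-factor field. A minimal sketch that flags low-confidence residues, assuming standard fixed-column PDB ATOM records; the threshold of 70 follows the commonly used AlphaFold DB “confident” band but is an adjustable assumption, and the `atom_line` helper exists only to build test records.

```python
def low_confidence_residues(pdb_lines, threshold=70.0):
    """Return (chain, residue number, pLDDT) for CA atoms below threshold.

    Assumes AlphaFold-style PDB output, where the B-factor field
    (columns 61-66, i.e. line[60:66]) holds pLDDT.
    """
    flagged = []
    for line in pdb_lines:
        if line.startswith("ATOM") and line[12:16].strip() == "CA":
            chain = line[21]
            resnum = int(line[22:26])
            plddt = float(line[60:66])
            if plddt < threshold:
                flagged.append((chain, resnum, plddt))
    return flagged

def atom_line(serial, name, resname, chain, resnum, plddt):
    """Build a fixed-width PDB ATOM record (coordinates zeroed for brevity)."""
    return "{:6s}{:5d} {:^4s} {:3s} {:1s}{:4d}    {:8.3f}{:8.3f}{:8.3f}{:6.2f}{:6.2f}".format(
        "ATOM", serial, name, resname, chain, resnum, 0.0, 0.0, 0.0, 1.0, plddt
    )

lines = [
    atom_line(1, "CA", "MET", "A", 1, 92.5),
    atom_line(2, "CA", "GLY", "A", 2, 45.1),
    atom_line(3, "CB", "GLY", "A", 2, 45.1),  # not a CA atom, ignored
]
print(low_confidence_residues(lines))  # [('A', 2, 45.1)]
```

With a real file, `low_confidence_residues(open(path))` works the same way, since it only slices each line. A high pLDDT says the model is confident, not that the structure is right in situ, which is the point of the caveat above.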

Material Discovery

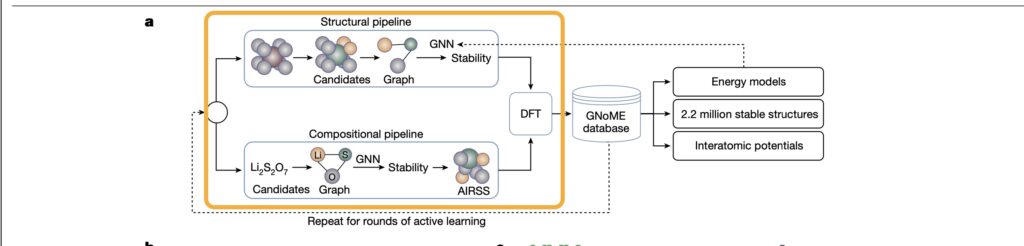

Scaling deep learning for materials discovery (Merchant, Amil et al. “Scaling deep learning for materials discovery.” Nature 624 (2023): 80–85.), which uses E(3)-equivariant graph neural networks for data-efficient and accurate interatomic potentials (Batzner, Simon L. et al. “E(3)-equivariant graph neural networks for data-efficient and accurate interatomic potentials.” Nature Communications 13 (2021).)

This work presents Neural Equivariant Interatomic Potentials (NequIP), an E(3)-equivariant neural network approach for learning interatomic potentials from ab-initio calculations for molecular dynamics simulations.

So this is a very task/model-specific architecture.
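“E(3)” is the group of rotations, reflections, and translations in 3-D space: a physical energy must not change when the whole atomic configuration is rotated, mirrored, or shifted, and forces must rotate along with it. NequIP builds that symmetry into the network itself. The sketch below does not reproduce NequIP; it uses a classical Lennard-Jones pair potential (arbitrary units, made-up coordinates) simply to show the property being enforced: an energy built from interatomic distances is unchanged by these transformations.

```python
import math

def lennard_jones_energy(positions, epsilon=1.0, sigma=1.0):
    """Total pairwise Lennard-Jones energy; depends only on distances."""
    energy = 0.0
    for i in range(len(positions)):
        for j in range(i + 1, len(positions)):
            r = math.dist(positions[i], positions[j])
            sr6 = (sigma / r) ** 6
            energy += 4 * epsilon * (sr6 * sr6 - sr6)
    return energy

def rotate_z_and_shift(p, theta, shift):
    """Rotate point p about the z-axis by theta, then translate by shift."""
    x, y, z = p
    c, s = math.cos(theta), math.sin(theta)
    return (c * x - s * y + shift[0], s * x + c * y + shift[1], z + shift[2])

atoms = [(0.0, 0.0, 0.0), (1.1, 0.0, 0.0), (0.0, 1.2, 0.3)]
moved = [rotate_z_and_shift(p, 0.7, (5.0, -2.0, 1.5)) for p in atoms]
mirrored = [(-x, y, z) for x, y, z in atoms]  # reflection through the y-z plane

print(lennard_jones_energy(atoms))
print(lennard_jones_energy(moved))     # same value, up to floating-point rounding
print(lennard_jones_energy(mirrored))  # same value, up to floating-point rounding
```

Equivariance is the related property for vector outputs: forces computed as the negative gradient of such an energy rotate with the structure. Building the symmetry into the model, rather than hoping it is learned from data, is part of what makes these potentials data-efficient.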

Summary: This paper is especially interesting in that the network model is used in a pipeline built around very accurate ab initio calculations of material stability, which is practical since the task is the discovery of potentially stable crystal structures.

The charter is to find undiscovered materials that would form stable crystals if they were created (the synthesis of the crystals isn’t addressed). The network is very specialized: it uses a Graph Neural Network (GNN) as part of a pipeline that performs very accurate ab initio calculations of stability, so a predicted crystal is extremely likely to be stable if it can be synthesized. This is a case in which the isomorphic distance between the model and reality is very, very small.

Antibody Optimization

Hie, B.L., Shanker, V.R., Xu, D. et al. Efficient evolution of human antibodies from general protein language models. Nat Biotechnol (2023). https://doi.org/10.1038/s41587-023-01763-2

Here we report that general protein language models can efficiently evolve human antibodies by suggesting mutations that are evolutionarily plausible, despite providing the model with no information about the target antigen, binding specificity or protein structure

We performed language-model-guided affinity maturation of seven antibodies, screening 20 or fewer variants of each antibody across only two rounds of laboratory evolution, and improved the binding affinities of four clinically relevant, highly mature antibodies up to sevenfold and three unmatured antibodies up to 160-fold, with many designs also demonstrating favorable thermostability and viral neutralization activity against Ebola and severe acute respiratory syndrome coronavirus 2 (SARS-CoV-2) pseudoviruses.

Summary: Noteworthy in that it used a very general model, but on a very local, very isomorphic task: suggesting point mutations to existing antibody sequences to improve their efficacy. The results were strikingly good. The model provided solid starting points for exploration that very quickly achieved significant improvements, even in clinically relevant antibodies that were already highly matured.
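My reading of the paper is that it favors substitutions to which the language models assign higher likelihood than the wild-type residue, requiring agreement across several models. The sketch below illustrates that kind of selection rule; it is not the authors’ code. The `models` interface, the lookup-table “models”, and the sequence fragment are all hypothetical stand-ins for real protein language models and antibody sequences.

```python
def propose_mutations(sequence, models, min_votes=2):
    """Suggest point mutations that several models prefer over wild type.

    models: list of callables; model(sequence, position) returns a dict
            {amino_acid: probability} (hypothetical interface standing in
            for a protein language model's per-position predictions).
    min_votes: how many models must prefer a substitution to keep it.
    Returns mutations in the usual notation, e.g. 'T2S' (1-based position).
    """
    votes = {}
    for model in models:
        for pos, wt in enumerate(sequence):
            probs = model(sequence, pos)
            for aa, p in probs.items():
                if aa != wt and p > probs.get(wt, 0.0):
                    key = (pos, wt, aa)
                    votes[key] = votes.get(key, 0) + 1
    return sorted(
        f"{wt}{pos + 1}{aa}" for (pos, wt, aa), n in votes.items() if n >= min_votes
    )

def table_model(table):
    """Toy model from a lookup table; unlisted positions favor wild type."""
    def model(sequence, pos):
        return table.get(pos, {sequence[pos]: 1.0})
    return model

seq = "QTGAY"  # made-up fragment
m1 = table_model({1: {"T": 0.3, "S": 0.5}, 3: {"A": 0.4, "G": 0.35}})
m2 = table_model({1: {"T": 0.2, "S": 0.6}, 3: {"A": 0.5, "G": 0.1}})
m3 = table_model({1: {"T": 0.4, "S": 0.3}})
print(propose_mutations(seq, [m1, m2, m3]))  # ['T2S']
```

The model only narrows the search; as the excerpt notes, the shortlisted variants (20 or fewer per antibody) still had to be tested in the lab.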

It’s extremely noteworthy that they did this on about $10K of hardware.

Moreover, our affinity improvements on unmatured antibodies are within the 2.3-fold to 580-fold range previously achieved by a state-of-the-art, in vitro evolutionary system applied to unmatured, anti-RBD nanobodies (in which the computational portion of our approach, which takes seconds, is replaced with rounds of cell culture and sorting, which take weeks)

Overall Summary

Specialized structures on top of the base large language model are currently (December 2023) necessary to enable generalizations into areas that involve more of an isomorphic leap. However, what constitutes a large isomorphic distance is hard to characterize, because there may be statistical regularities in the training data that the models can elucidate that are actually extensible, but were unknown prior to examination by the model’s training procedure.

What is most assuredly true is that none of the example systems would’ve succeeded if they had started with bad data, as they certainly had no in-system facility for dismissing bad data beyond filtering statistical noise in the training set. Although most of the systems were modified to give them enhanced capability for computing domain-specific cost functions, none of them had any kind of modularity for generalization, or incremental learning.

The characteristics of vetting, intra-system comparison, and incrementality seem at best underdeveloped, at worst nonexistent. I wouldn’t say it’s impossible to modify the architectures to perform these things, but they’re certainly not part of the structure as I understand it, and would not be easy to incorporate without major revisions.
